In the last three posts, I looked at the entire slate of the 2025 Oscar-nominated movies from the perspective of three different communities: the Academy, the viewing public, and the professional critics.

The Academy of Motion Picture Arts and Sciences (AMPAS) is a rather elite group of fewer than 10,000 voting members, all of whom are active in some way in making motion pictures. The Academy nominates movies for consideration and then votes on them, and the results are revealed at the ceremony, which this year is on March 2, 2025. Of course, we don’t know for sure what the Academy thinks of these movies until the voting is announced. But we can get a general idea from the number and type of nominations they receive. I have constructed an index number that attempts, roughly, to quantify the perceived quality of a movie based strictly on the nomination patterns. That number is called the Oscar Quality Index (OQI), and it is described in more detail in my first essay of the year, The Initial Analysis.

Movies are made to inform and entertain the viewing public. So the audience constitutes the second most important group, and they aren’t afraid to tell us what they think of a movie. Their opinions are captured in the form of ratings of the movies, and, although there are multiple sources, I use two of them to create an audience rating. The raw numbers are adjusted to make them more comparable (because one rating is consistently higher than the other) and then averaged to come up with a rating. Based on the rating, the movies can then be ranked in order as the audience/public ranking. (It should be noted that these ratings were captured shortly after the nominations were announced and, therefore, do not reflect the effect of receiving a nomination. The ratings often change after Oscar season, and so the numbers I report at review time are sometimes different from the ones reported here.)
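To make the "adjust, then average, then rank" idea concrete, here is a minimal sketch in Python. It is not the actual adjustment used for these posts, which isn't spelled out here. It assumes the simplest possible correction: shift the consistently higher source down by its average gap over the other source. The film names, the numbers, and the function names `adjust_and_average` and `rank_by_rating` are all made up for illustration.

```python
from statistics import mean

# Hypothetical ratings on a 0-10 scale from two made-up sources (not real data).
source_a = {"Film X": 8.0, "Film Y": 7.0, "Film Z": 6.0}  # runs consistently higher
source_b = {"Film X": 7.0, "Film Y": 6.5, "Film Z": 4.5}

def adjust_and_average(high, low):
    """Shift the higher-running source down by its mean gap, then average.

    Assumption: a constant shift is enough to make the sources comparable.
    """
    gap = mean(high[film] - low[film] for film in high)
    return {film: round(((high[film] - gap) + low[film]) / 2, 2) for film in high}

def rank_by_rating(ratings):
    """Return {film: rank}, where rank 1 is the highest-rated film."""
    ordered = sorted(ratings, key=ratings.get, reverse=True)
    return {film: position for position, film in enumerate(ordered, start=1)}

audience_rating = adjust_and_average(source_a, source_b)
audience_rank = rank_by_rating(audience_rating)
```

With these placeholder numbers the average gap is one full point, so the first source is lowered by one point before the two are averaged. A different adjustment (rescaling, for example) would change the ratings and possibly the order.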

Finally, there are the professional critics who, for reasons that aren’t clear to me, think their opinions matter more than the viewing public’s, and they manage to get their opinions published somewhere. There are at least two numerical aggregations of these reviews, and I adjust and average them to create a critical rating number. I use that to rank the movies in order as the critics see them. Those ratings are subject to the same cautions mentioned above for public ratings.

The last two essays detail and compare the ratings and rankings of the public and the critics on two different sets of movies: the special interest films, which include the movies nominated in the Animated, Documentary, and International Feature categories, and the general interest films, which are those nominated in any of the other 17 categories. If readers prefer more in-depth analysis than is provided here, I refer them to the previous two posts, each of which dealt with one of these groups.

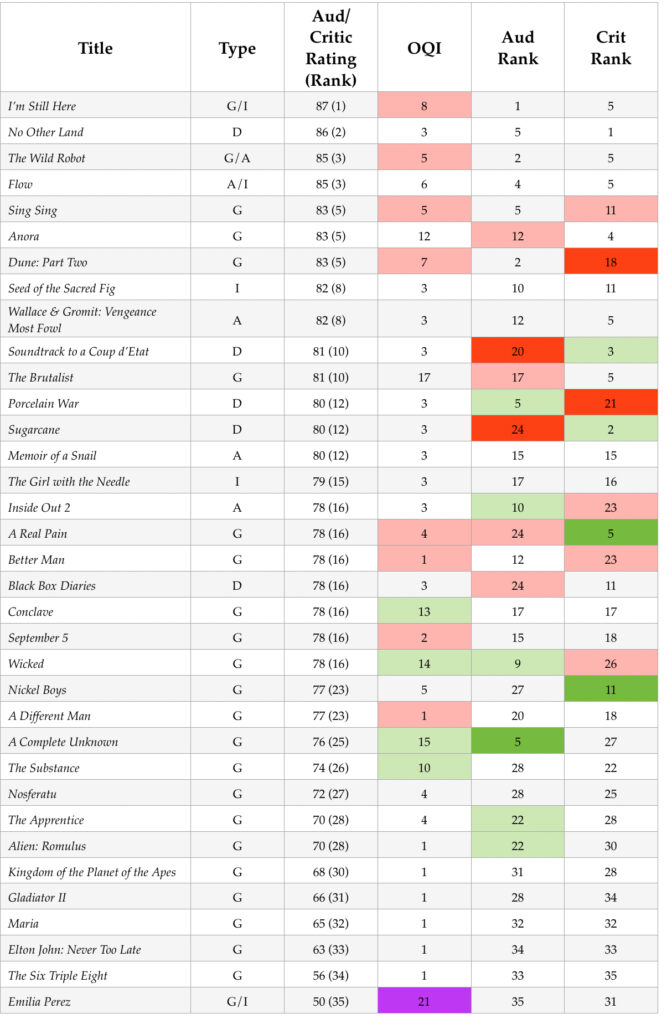

What remains is to summarize the ratings and rank order of all three of these communities to form a rank order of all 35 of this year’s nominated feature films. To do that, I take an average of the audience and critical ratings and then rank the films according to that number. This gives my initial ranking of these films, without having seen any of them. This information will inform my Oscar predictions, and we will revisit these rankings after I have reviewed all of the films to see how well this metric compares to my actual experience with the movies.
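The combined ranking described above amounts to a simple average followed by a sort. The sketch below shows that arithmetic with placeholder titles and invented ratings. The name `overall_ranking` is hypothetical, and the equal weighting of the two groups is taken from the description of "an average of the audience and critical ratings."

```python
# Hypothetical adjusted ratings for three placeholder films (not real data).
audience = {"Film P": 8.2, "Film Q": 7.0, "Film R": 6.4}
critics = {"Film P": 7.0, "Film Q": 9.0, "Film R": 6.0}

def overall_ranking(audience, critics):
    """Average the two ratings per film and list films from best to worst."""
    combined = {film: (audience[film] + critics[film]) / 2 for film in audience}
    ordered = sorted(combined.items(), key=lambda item: item[1], reverse=True)
    # Each entry is (rank, film, combined rating rounded for display).
    return [(rank, film, round(score, 2))
            for rank, (film, score) in enumerate(ordered, start=1)]

ranking = overall_ranking(audience, critics)
for rank, film, score in ranking:
    print(rank, film, score)
```

In this made-up example, the film the critics love but audiences only like ("Film Q") still ends up first, because the average treats both groups equally.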

Those numbers are all presented in the data table below. The films are listed in rank order based on the average of the audience and critical ratings, with the rating and (rank) shown in column 3. Column 2 is a type indicator with the following values: A (Animated), D (Documentary), I (International), and G (General Interest). Column 4 is my OQI number, mentioned above and described in the first post. Column 5 is the ranking based on the audience rating number, and Column 6 is the ranking based on the critic rating number. The red and green shadings in the last three columns mark cases where that particular ranking or number is not quite in sync with the ranking presented in column 3. Red indicates movies where that particular group ranked the movie lower than the average rating did, and green where that group ranked it higher. The intensity of the shading reflects the relative amount of disagreement. Purple means, well, see the discussion below about Emilia Perez! (I make all of this clearer in the discussion after the data table.)
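For readers who want to see the shading logic spelled out, here is a rough sketch of how a rank column could be compared with the overall rank. The cutoffs of 5 and 10 places are purely illustrative assumptions; the table's actual thresholds are not stated. The function name `shade` is made up, and the sketch covers only the two rank columns, not the OQI number.

```python
def shade(overall_rank, group_rank, mild=5, strong=10):
    """Classify how far one group's rank sits from the overall rank.

    `mild` and `strong` are assumed cutoffs (in rank places), not the
    values used for the real table.
    """
    diff = group_rank - overall_rank  # positive: the group ranked the film lower
    size = abs(diff)
    if size < mild:
        return "none"
    color = "red" if diff > 0 else "green"
    intensity = "dark" if size >= strong else "light"
    return f"{intensity} {color}"

# A film ranked 10th overall but 17th by one group gets a light red cell.
example = shade(overall_rank=10, group_rank=17)
```

Remember that a larger rank number means a worse placement, which is why a positive difference maps to red.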

Several things stand out to me in this data:

- Perhaps the most significant thing is the completely weird case of Emilia Perez. While both audience and critical ratings place this film at the very bottom of their rankings, the Academy awarded it the most nominations, giving it the highest OQI. Frankly, I’ve never seen this before. Basically, the Academy members, the filmmakers themselves, think the best thing they made last year is the film both the viewing public and the critics think is the worst. I don’t know how to account for that. (Note: I’ve rechecked the film’s ratings, and they have only gotten worse since I collected them a couple of weeks ago!)

- Another interesting point is that all of the Special Interest Movies ended up in the top half of the overall rankings. My guess is that the reason is the same one that leads me to assign them to their own groupings: people who watch Animated, Documentary, and International features aren’t exactly the same people who watch general interest films. And so their ratings employ a different conceptual take on a movie. Documentary lovers, for instance, are usually not the same people who would rate Gladiator II really highly, if they even choose to watch it. Animated films are often reserved for family-oriented viewers, and they may not want to see bodies decapitated either! So these viewers rate their movies higher. There are also, usually, far fewer viewers of special interest films than of general interest movies.

- Documentaries also show a pattern where the viewing public is less enamored of a film than the critics. Three of the documentaries show that pattern.

- In another documentary (Porcelain War), the pattern was reversed, with the viewing public rating the film significantly higher than the critics did. Since this is the exception to the rule in this category, it should be an interesting movie.

- Inside Out 2 is a special case in the Animated category, where the viewing public liked the film somewhat better than the critics did, which is usually the case with animated films. The question that raises for me is why were the other four less satisfying to the general public?

- None of the remaining special interest movies seems to generate any significant disagreements, and the fact that our top four ranked films (I’m Still Here, No Other Land, The Wild Robot, and Flow) are Special Interest movies is a salient feature in this year’s nomination pattern.

- There are two movies the Academy seemed to overreward with nominations that our other two groups were less enthusiastic about: Conclave and The Substance. The question with these two is: what did the filmmakers see in these movies that no one else did?

- In two other films, the Academy seemed to side with the viewing public and not the critics: Wicked and A Complete Unknown. The question here is why did the critics view these films so unfavorably?

- For four films, the Academy seemed to under-reward compared to the audience and critical consensus: I’m Still Here, The Wild Robot, September 5, and A Different Man. Movies like these are often surprises and better than you expect them to be. (Note: in the case of the animated film The Wild Robot, there may not have been much more they could have nominated it for.)

- For three films, the Academy seemed to go more with the critics’ opinion than the viewing public’s, resulting in underrewarding those films: Sing Sing, Dune: Part Two, and Better Man. The question for these films, especially for Dune, is why did audiences like them so much better than the critics did?

- Then there is A Real Pain, which critics loved and audiences liked much less, with the Academy ending up somewhere in the middle. A movie like this is a real test of the difference between the viewing public and the critic community.

- In two movies, Anora and The Brutalist, the Academy seemed to side more with the critics than the general public. Since the Academy often tends to side with the critics, the question here is what do they see in these films that the public is missing? Or do they put more meaning into these films than they deserve?

- Two other films that the Academy may have somewhat underrated compared to the general public are The Apprentice and Alien: Romulus. Frankly, I’m not looking forward to the film about our supposed President, and the latest in the Alien franchise is likely to be even less horrifying than current events!

- Finally, there is one film that went in the opposite direction. Nickel Boys is a film critics liked very much, but the viewing public didn’t, and the Academy went more with the public. Why did critics think it was so special?

- The other six films not mentioned all tend toward the bottom of the rankings, and there isn’t much disagreement about their placement. So those films, all in our Minor Events viewing festival toward the end of the year, most likely belong there.

In short, there are meaningful takeaways when you compare the Oscar nominees to how the real world views the movies. I’ve listed a few, and we’ll be getting back to them when I review these films individually.

Next up are my actual predictions for who will win each 2025 Oscar category and why!